Actually, I never had any issues with the files inside the vaults. It is Windows Explorer that causes issues with the encrypted files on disk, but only *if* their paths end up exceeding 260 characters. Here is a more detailed example of what I mean, based on my actual situation:

Consider the folder structure below: a Dropbox folder at the root of the drive (to keep paths short), then a folder structure that cannot be shortened any further, and then the vault itself with all of its encrypted files:

E:\Dropbox\somefoldername111\somefoldername222\somefoldername333\MyVAULTname\d\7K\57RUFKAASNWHSPH5KX3FL2R3RIWRFA\QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4

This file has a total path length of 241 characters. The first 77 characters are the part of the path that leads up to the encrypted vault, and the remaining 164 characters are taken up by the vault's own encrypted folders and file name.
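The split can be double-checked with a few lines of Python. This is only a counting sketch: the path string is copied from the example above, and `split_at_vault` is a hypothetical helper written for this illustration, not part of Cryptomator. Counted this way, the total comes out to 77 + 164 = 241 characters.

```python
# Example path copied from above; raw strings keep the backslashes literal.
FULL_PATH = (
    r"E:\Dropbox\somefoldername111\somefoldername222\somefoldername333\MyVAULTname"
    r"\d\7K\57RUFKAASNWHSPH5KX3FL2R3RIWRFA"
    r"\QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4"
)
VAULT_NAME = "MyVAULTname"


def split_at_vault(path: str, vault_name: str) -> tuple[str, str]:
    """Hypothetical helper: split a path into the user-controlled part (up to and
    including the vault folder and its trailing backslash) and the vault-internal
    part that Cryptomator manages."""
    marker = "\\" + vault_name + "\\"
    end = path.index(marker) + len(marker)
    return path[:end], path[end:]


outer, inner = split_at_vault(FULL_PATH, VAULT_NAME)
print(len(FULL_PATH), len(outer), len(inner))
```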

Cryptomator makes sure that this second part of the path never exceeds a certain threshold by shortening file and folder names where needed. However, the first part of the path, which leads up to the vault on disk, is outside of Cryptomator's control (as it should be).

But if the user moves the encrypted vault (or renames one of the folders containing it) in a way that lengthens the first part of the path, some of the encrypted files will inadvertently be pushed over the 260-character limit and will usually either:

a) disappear from Windows Explorer, which in this case (as I just realized) shouldn't be much of a problem, since users should not be browsing the encrypted files there anyway; or

b) become inaccessible to other programs, including backup and cloud sync tools. This can produce partial backups that break the encrypted folder structure and cause files to be lost. The user will not notice these partial backups or partial cloud syncs until they try to restore a vault and find that files are missing.
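Because these failures are silent, it may be worth checking a vault before moving it. Below is a minimal Python sketch, assuming the legacy Win32 `MAX_PATH` limit of 260 (which counts the terminating null character, so at most 259 visible characters) and assuming the vault is an ordinary folder on disk. `overlong_after_move` is a hypothetical helper, not a Cryptomator or Windows API: it walks the vault, rebuilds each file's path as it would look under a new parent folder, and lists the files that would no longer fit.

```python
import os
import tempfile
from pathlib import Path, PureWindowsPath

# Assumption: legacy Win32 MAX_PATH, which includes the terminating NUL.
MAX_PATH = 260


def overlong_after_move(vault_dir, new_parent, limit=MAX_PATH):
    """Hypothetical helper: return the vault-relative paths of files whose full
    Windows path would exceed `limit` if the vault folder were moved into
    `new_parent`."""
    vault_name = Path(vault_dir).name
    too_long = []
    for root, _dirs, files in os.walk(vault_dir):
        for name in files:
            rel = Path(root, name).relative_to(vault_dir)
            # Rebuild the path as Windows would see it after the move.
            target = PureWindowsPath(new_parent, vault_name, *rel.parts)
            if len(str(target)) + 1 > limit:  # +1 for the terminating NUL
                too_long.append(str(rel))
    return sorted(too_long)


# Usage: recreate the example file in a temporary mock vault.
FILE_NAME = (
    "QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M"
    "7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4"
)
with tempfile.TemporaryDirectory() as tmp:
    vault = Path(tmp, "MyVAULTname")
    folder = vault / "d" / "7K" / "57RUFKAASNWHSPH5KX3FL2R3RIWRFA"
    folder.mkdir(parents=True)
    (folder / FILE_NAME).touch()
    at_root = overlong_after_move(
        vault, r"E:\Dropbox\somefoldername111\somefoldername222\somefoldername333"
    )
    on_desktop = overlong_after_move(
        vault,
        r"C:\Users\someUsername\Desktop\Dropbox"
        r"\somefoldername111\somefoldername222\somefoldername333",
    )

print(at_root, on_desktop)
```

Here `at_root` comes out empty, while `on_desktop` lists the example file, because the rebuilt Desktop path no longer fits.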

For example, if we moved the Dropbox folder from the example path above into the Windows desktop folder, the new, longer path would be this (without changing anything inside the vault itself):

C:\Users\someUsername\Desktop\Dropbox\somefoldername111\somefoldername222\somefoldername333\MyVAULTname\d\7K\57RUFKAASNWHSPH5KX3FL2R3RIWRFA\QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4

This new path is 268 characters long, which means this specific file, along with many others, now becomes inaccessible to many programs as described above. These files could be lost if or when the user restores a backup, syncs to another machine, and so on.

For this reason, I always assumed it is safer to keep the encrypted file names as short as possible, leaving more of the available path length for the user's own folder names and making it less likely that moving or renaming folders accidentally pushes encrypted files over the 260-character limit.

Specifically, this part of the path alone takes up almost two-thirds of the maximum path length:

\d\7K\57RUFKAASNWHSPH5KX3FL2R3RIWRFA\QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4
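Put differently, the vault's internal part sets a budget for everything in front of it. A minimal Python sketch of that arithmetic, assuming the legacy Win32 `MAX_PATH` of 260 counts the terminating null character (so at most 259 visible characters) and assuming the path shown above is the longest one inside the vault; `prefix_budget` is a hypothetical helper written for this illustration:

```python
# Vault-internal part from the example above, including its leading backslash.
VAULT_INTERNAL = (
    r"\d\7K\57RUFKAASNWHSPH5KX3FL2R3RIWRFA"
    r"\QT2P3VC323BSE33BLFYH5ZSPLVBN455NYR6B34L2KF2LT3L45J2CFC7P5FMBRQPEXFTW5M7QH7QZSLMOR2LFJDJH5CLD4R7VWCCCV3CDLNLGATV74ORDBDANHZ5XWDP4"
)
MAX_PATH = 260  # assumption: legacy Win32 limit, including the terminating NUL


def prefix_budget(internal: str, limit: int = MAX_PATH) -> int:
    """Hypothetical helper: longest vault location (path up to and including
    the vault folder name) that still leaves room for `internal`."""
    return limit - 1 - len(internal)


budget = prefix_budget(VAULT_INTERNAL)
for prefix in (
    r"E:\Dropbox\somefoldername111\somefoldername222\somefoldername333\MyVAULTname",
    r"C:\Users\someUsername\Desktop\Dropbox"
    r"\somefoldername111\somefoldername222\somefoldername333\MyVAULTname",
):
    status = "fits" if len(prefix) <= budget else "too long"
    print(f"{len(prefix):3d} / {budget} chars: {status}")
```

Under these assumptions the budget is 94 characters: the `E:\` location (76 characters) fits, while the Desktop location (103 characters) does not.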

Will this become even longer in the newly proposed file structure? If so, that would leave far fewer characters for the user-defined part of the path, and it would become very easy to push files over the 260-character limit when moving things around.

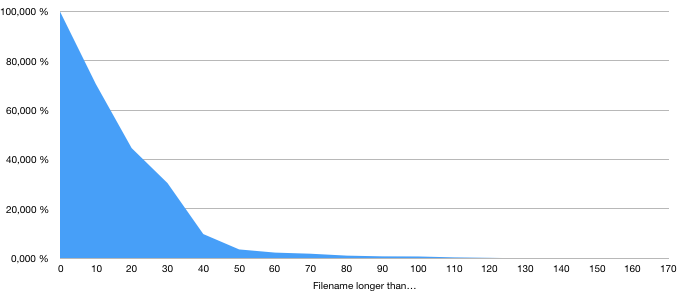

Here are the results:
